Just as biological nerve cells are the basic building blocks of our brain, artificial neurons are the basic building blocks of artificial neural networks and of the artificial intelligence built on them. Deep learning and other successful branches of artificial intelligence rest on this unit. But how is such an artificial neuron actually constructed? And how close are these artificial nerve cells to their biological models?

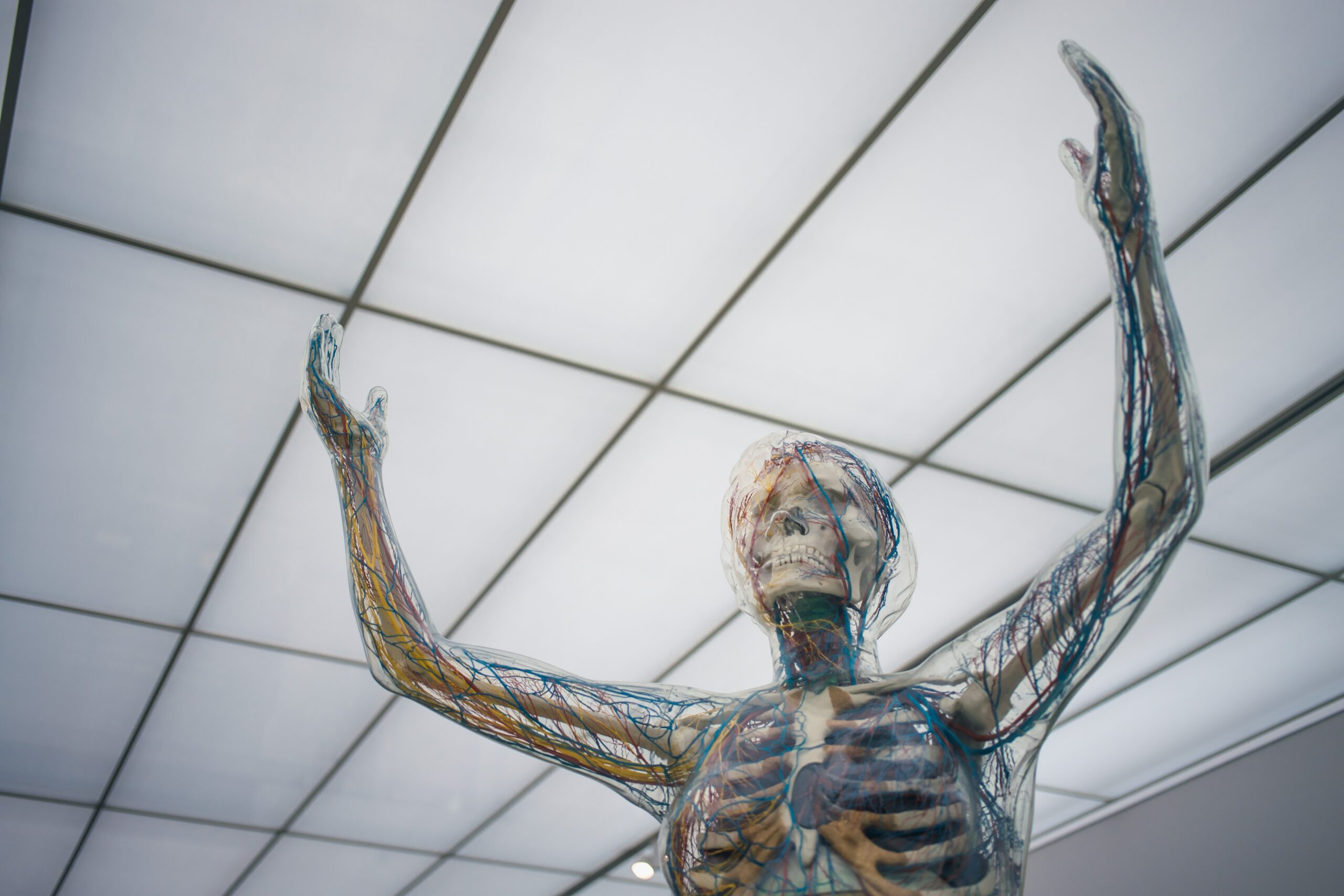

Structure of biological neurons

To answer this, it is worth taking a brief look at biological neurons. Their main task is to take in information, process it and, if necessary, pass it on. Particular parts of the neuron are especially important for each of these sub-tasks. A nerve cell can be roughly divided into four parts: the dendrites, the cell body (also known as the soma), the axon and the synapses. The dendrites are primarily responsible for taking in information. The axon conducts signals away from the cell body, and the synapses pass them on to other neurons. The cell body helps to process the information, but is primarily responsible for the nerve cell's metabolism.

Simplified structure of a biological nerve cell. Not all components are used in the artificial neuron. Adapted from: Unknown author, CC BY-SA 3.0, via Wikimedia Commons

Much has been said about information so far, but what does that actually mean in the context of a nerve cell? Like every cell in the body, every neuron has a so-called membrane potential: a small electrical voltage between the inside and the outside of the cell membrane. Neurons can actively change this voltage and thereby store and forward information. What exactly these voltage changes represent depends on the neuron's context. For example, for a neuron in a visual area of the brain, an action potential (a brief reversal of the membrane potential, described below) could signal that a particular line is blue or horizontally oriented. For a nerve cell involved in hearing, an action potential could signal the presence of a specific pitch.

When a neuron "decides" that the information it receives should be passed on, it sends an action potential down the axon from the cell body to the synapses. This "decision" and the forwarding follow the so-called all-or-nothing principle. Either the neuron does not forward the incoming information, and then nothing happens. Or an action potential is triggered at the junction between the cell body and the axon (the axon hillock). The membrane potential then briefly reverses, and this reversal spreads along the entire axon.

Information transfer between nerve cells

While information is transmitted electrically within a nerve cell, transmission between neurons is chemical at most synapses. The first neuron releases so-called neurotransmitters, which diffuse across the narrow gap (the synaptic cleft) to the dendrites of the second neuron. There they activate special receptors and thereby change the membrane potential. This change spreads to the junction between the cell body and the axon, where a threshold decides whether an action potential is triggered. The threshold corresponds to a minimum change in membrane potential: if it is not reached, no action potential is triggered and the incoming information is not passed on.
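The threshold decision can be illustrated with a deliberately crude toy model. It assumes typical textbook values (a resting potential of about -70 mV and a firing threshold of about -55 mV), simply adds up the incoming membrane potential changes and ignores their timing and decay. The function name `fires` and the constants are hypothetical, chosen only for this sketch.

```python
from typing import List

# Assumed, typical textbook values; real neurons vary.
RESTING_POTENTIAL_MV = -70.0
THRESHOLD_MV = -55.0


def fires(psp_changes_mv: List[float]) -> bool:
    """Return True if the summed membrane potential changes reach the threshold.

    Positive values depolarize (excitatory input), negative values
    hyperpolarize (inhibitory input). Timing and decay are ignored here.
    """
    membrane_potential = RESTING_POTENTIAL_MV + sum(psp_changes_mv)
    # All or nothing: either the threshold is reached or nothing happens.
    return membrane_potential >= THRESHOLD_MV


print(fires([5.0, 4.0]))              # False: -61 mV stays below threshold
print(fires([8.0, 6.0, 3.0]))         # True: -53 mV reaches the threshold
print(fires([8.0, 6.0, 3.0, -4.0]))   # False: inhibition keeps it at -57 mV
```

The same inputs can fire the neuron or not depending on whether an inhibitory change arrives as well, which is the essence of the threshold rule described above.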

And artificial neurons?

A "simple" artificial neuron mathematically mimics the components of a biological neuron just described. It consists of a so-called transfer function and an activation function. The transfer function mimics the uptake of information in the dendrites, while the activation function simulates the all-or-nothing decision for (or against) an action potential. The axon is no longer needed in its biological sense, since its main job is to bridge physical distances in the brain. In a computer, the information can simply be stored temporarily and does not have to be transported anywhere.
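Written as formulas, the two stages can be sketched as follows. This assumes the weighted-sum transfer function used by the classic variant described further below; the notation is our own: x_1 to x_n are the incoming signals, w_1 to w_n their weights (the synaptic strengths discussed next) and φ is the activation function.

```latex
\mathrm{net} = \sum_{i=1}^{n} w_i \, x_i \quad \text{(transfer function)},
\qquad
y = \varphi(\mathrm{net}) \quad \text{(activation function)}
```

Swapping the transfer function or φ changes the neuron model while keeping this two-stage structure.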

Overview of an artificial neuron. Adapted from: Chrislb, CC BY-SA 3.0, via Wikimedia Commons

Of the synapse, only one property is kept: the synaptic strength. It is one of the foundations of biological (and artificial!) learning and corresponds to the magnitude of the change in membrane potential when neurotransmitters reach the dendrites of the postsynaptic neuron. In the artificial neuron, the synaptic strength corresponds to the so-called weights of the incoming signals. When an artificial nerve cell or an artificial neural network learns, it is these weights that are adjusted so that the computation of the neuron or network gets better and better.
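How "adjusting the weights" can look in practice is sketched below with the classic perceptron learning rule. The text does not name a learning algorithm; the perceptron rule is our choice because it fits a single threshold neuron, and the helper names (`predict`, `train_perceptron`, `AND_SAMPLES`) are hypothetical. The neuron learns the logical AND function from four examples: whenever it answers wrongly, each weight is nudged in the direction that reduces the error.

```python
from typing import List, Sequence, Tuple

# (inputs, desired output) pairs; hypothetical type alias for this sketch.
Sample = Tuple[Sequence[float], int]


def predict(weights: List[float], bias: float, inputs: Sequence[float]) -> int:
    """Weighted sum plus bias, followed by an all-or-nothing step."""
    net = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if net >= 0 else 0


def train_perceptron(samples: List[Sample], learning_rate: float = 1.0, epochs: int = 20):
    """Perceptron learning rule: change each weight in proportion to the error."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0  # acts as a negative threshold: net >= 0 means sum(w * x) >= -bias
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0 or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Training data for logical AND: output 1 only if both inputs are 1.
AND_SAMPLES: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
print([predict(weights, bias, x) for x, _ in AND_SAMPLES])  # [0, 0, 0, 1]
```

Only the weights and the bias change during training; the structure of the neuron stays the same, mirroring how synaptic strengths change in biological learning.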

Classic artificial nerve cells

A classic variant simply uses a weighted sum as the transfer function: each incoming signal is multiplied by its synaptic strength (its weight) and the results are added up. The activation function then maps this sum to either zero or one using a threshold value. This mimics the all-or-nothing principle, with zero representing no activity and one representing an action potential.
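A minimal sketch of this classic threshold neuron follows. The function name `threshold_neuron` is hypothetical, and the weights and thresholds are hand-picked for illustration: with both weights set to 1, a threshold of 2 makes the neuron compute logical AND, while a threshold of 1 makes it compute logical OR.

```python
from typing import Sequence


def threshold_neuron(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> int:
    """Classic artificial neuron: weighted sum, then a 0/1 step at the threshold."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    # Transfer function: add up the inputs, each scaled by its weight.
    net = sum(x * w for x, w in zip(inputs, weights))
    # Activation function: all or nothing.
    return 1 if net >= threshold else 0


# Same weights, different thresholds (values chosen for illustration only).
for a in (0, 1):
    for b in (0, 1):
        and_out = threshold_neuron([a, b], [1.0, 1.0], threshold=2.0)
        or_out = threshold_neuron([a, b], [1.0, 1.0], threshold=1.0)
        print(a, b, and_out, or_out)
# 0 0 0 0
# 0 1 0 1
# 1 0 0 1
# 1 1 1 1
```

Changing only the threshold changes which logical function the neuron computes, which shows how much of the behavior sits in these few parameters.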

To be precise, there is no single "artificial neuron". Depending on which aspect you want to simulate, different models can be used. If you stick to the idea of an artificial neuron described above, you can, for example, vary the transfer and activation functions. Detailed models can reproduce the three-dimensional shape of neurons and base their physical properties as closely as possible on those of biological nerve cells. If, on the other hand, you are not interested in how action potentials come about but only in when they occur over time, certain random processes can imitate their occurrence well, without simulating the underlying biology.
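One common random process for this purpose is the homogeneous Poisson process; the text does not name a specific process, so this choice is our assumption. In it, the intervals between spikes are drawn from an exponential distribution, so only the average firing rate has to be specified. The function name `poisson_spike_train` and the 20 Hz rate are hypothetical values for illustration.

```python
import random
from typing import List, Optional


def poisson_spike_train(rate_hz: float, duration_s: float, seed: Optional[int] = None) -> List[float]:
    """Return spike times (in seconds) of a homogeneous Poisson process.

    Inter-spike intervals are exponentially distributed with mean 1 / rate_hz;
    no membrane or ion-channel biology is simulated.
    """
    rng = random.Random(seed)
    spike_times: List[float] = []
    t = rng.expovariate(rate_hz)  # time until the first spike
    while t < duration_s:
        spike_times.append(t)
        t += rng.expovariate(rate_hz)  # time until the next spike
    return spike_times


spikes = poisson_spike_train(rate_hz=20.0, duration_s=2.0, seed=42)
# On average about 40 spikes (20 Hz * 2 s); the exact count depends on the seed.
print(len(spikes))
```

Such a model reproduces the irregular timing of spikes at a given rate, but says nothing about why a spike occurs, which is exactly the trade-off described above.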

So what can you do with an artificial neuron?

Even though a single neuron can already learn and solve certain problems, neurons only become truly powerful when they are combined into a neural network. This applies to biological and artificial neurons alike. But that is a topic in itself…

How do you see it? Do you think neurons can really be reproduced artificially?